Load Google Drive CSV into Pandas DataFrame for Google Colaboratory

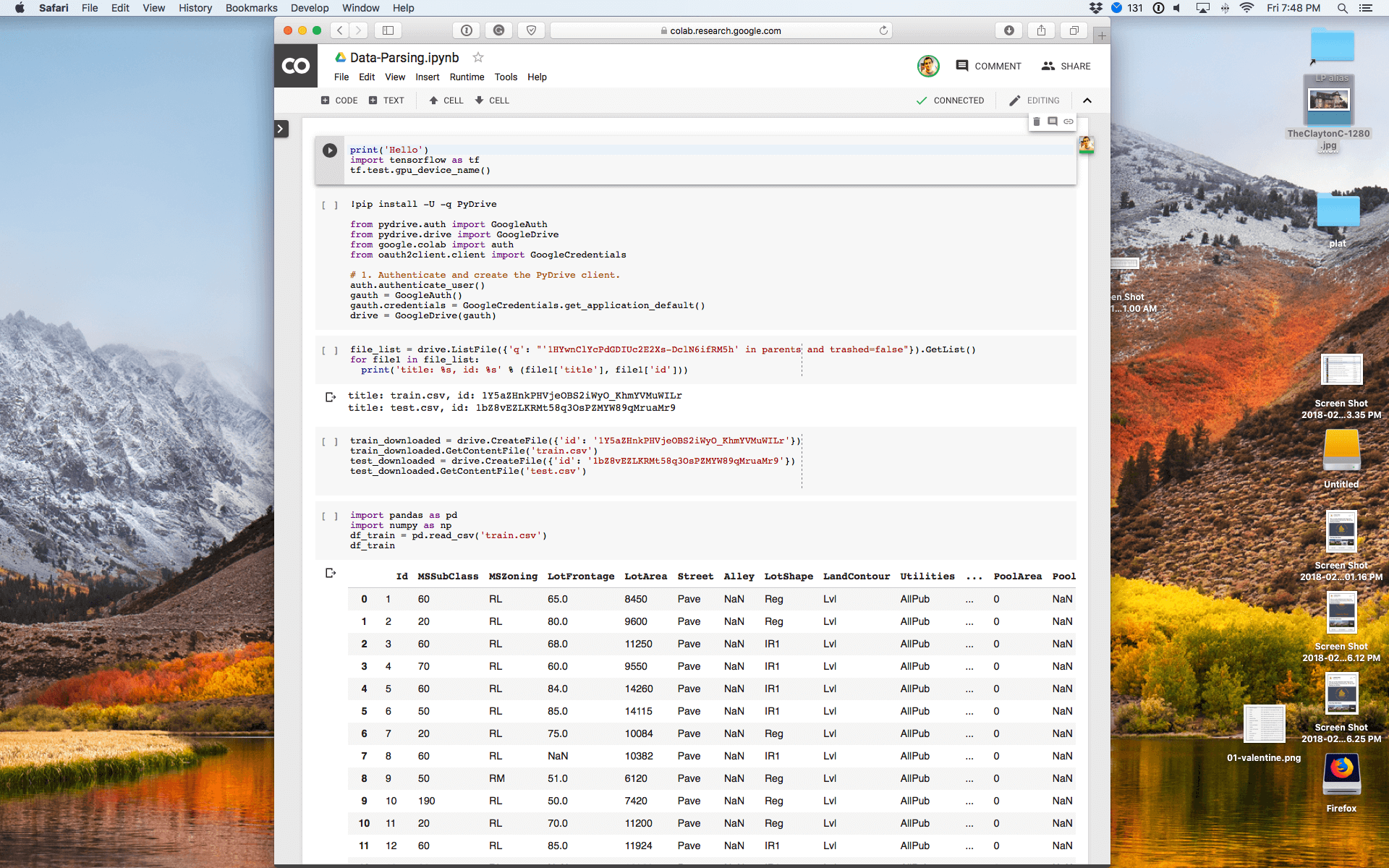

While researching platforms for running common machine learning algorithms on advertising keyword data, I found Google Colaboratory. It’s essentially a machine learning environment with accessible Jupyter notebooks, but without the headaches of setting up your own dev environment. I wanted to try it out with a small data set, mainly for educational and research purposes.

Of course, it’s never quite that easy. Right out of the gate, I needed to figure out how to load a train.csv and test.csv stored in Google Drive into the Jupyter notebook, and more specifically into a Pandas DataFrame. Instructions exist, but they are intermingled with other things I didn’t care about.

Here is a quick rundown of Jupyter code to load existing CSV files stored in Google Drive into Google Colab.
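As a minimal sketch of the first step, assuming TensorFlow is preinstalled in the Colab runtime, a GPU check could look like the following. The helper names `describe_gpu` and `check_gpu` are illustrative and not taken from the original notebook.

```python
def describe_gpu(device_name):
    """Turn the device string reported by TensorFlow into a readable message."""
    if not device_name:
        # tf.test.gpu_device_name() returns an empty string when no GPU is attached.
        return "No GPU found"
    return "Found GPU at: {}".format(device_name)


def check_gpu():
    """Ask TensorFlow which GPU (if any) this runtime can see."""
    # Imported inside the function so the helpers can be defined
    # before TensorFlow is actually needed.
    import tensorflow as tf
    return describe_gpu(tf.test.gpu_device_name())
```

In a notebook cell, `print(check_gpu())` shows the message.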


This loads up TensorFlow and displays which GPU is being used. If no GPU is reported, go to Runtime -> Change Runtime Type, change Hardware accelerator to GPU and hit save. Then re-run the code above. Once the output names a GPU, you are ready to move on.
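A sketch of the install-and-authorize step, assuming the PyDrive and oauth2client packages together with Colab's `google.colab.auth` module. The function name `build_drive_client` is illustrative. The install line is a notebook shell command, so it appears here as a comment and would run in its own cell.

```python
# In a separate Colab cell, install PyDrive first:
#   !pip install -U -q PyDrive


def build_drive_client():
    """Authorize this notebook and return a PyDrive client for Google Drive."""
    # These imports only resolve inside Colab with PyDrive installed,
    # so they are kept inside the function.
    from google.colab import auth
    from oauth2client.client import GoogleCredentials
    from pydrive.auth import GoogleAuth
    from pydrive.drive import GoogleDrive

    auth.authenticate_user()  # starts the interactive authorization prompt
    gauth = GoogleAuth()
    gauth.credentials = GoogleCredentials.get_application_default()
    return GoogleDrive(gauth)
```

Calling `drive = build_drive_client()` in a cell gives a client that the later steps can reuse.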


The above code installs PyDrive, which is used to access Google Drive, and kicks off the process of authorizing the notebook running in the Google Colaboratory environment to touch your files. When it runs, you are presented with a link to click, which asks you to confirm that Google Colab can access Google Drive and then provides a unique key. Enter the key back in the notebook.
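Listing a folder's contents could be sketched as below, assuming `drive` is an authorized PyDrive `GoogleDrive` client. The helper names `folder_query` and `list_folder` are illustrative, and the folder ID is whatever identifies your own Drive folder.

```python
def folder_query(folder_id):
    """Build a Drive search query for non-trashed files directly inside a folder."""
    return "'{}' in parents and trashed=false".format(folder_id)


def list_folder(drive, folder_id):
    """Return (title, id) pairs for every file in the given Drive folder."""
    file_list = drive.ListFile({'q': folder_query(folder_id)}).GetList()
    # Each entry behaves like a dict with 'title' and 'id' keys.
    return [(f['title'], f['id']) for f in file_list]
```

Looping over `list_folder(drive, folder_id)` and printing each pair shows the titles next to their ids.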


This code assumes your CSV files are in a folder. It prints the files in that folder along with their unique identifiers, which are used below. Replace the folder ID in the code with the ID of your own folder.

The output will be a list of files in the specified folder and their ids.


Now the files get pulled into Google Colab. GetContentFile saves each file to the local environment under the filename you give it.
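A minimal sketch of the download step, again assuming `drive` is an authorized PyDrive client. The helper name `download_files` is illustrative, and the file ids come from the folder listing.

```python
def download_files(drive, files):
    """Save Drive files locally; `files` maps local filename -> Drive file id."""
    for filename, file_id in files.items():
        drive_file = drive.CreateFile({'id': file_id})
        # GetContentFile downloads the content and writes it to `filename`.
        drive_file.GetContentFile(filename)
    return list(files)
```

For the two files in this post, the mapping would have the keys `'train.csv'` and `'test.csv'`, each paired with its own id.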


Now comes the easy part. Since the files have been saved to the local environment, load a saved file by its filename into a DataFrame and print out a few lines to verify.
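Loading and checking a file might look like this, assuming pandas is available in the runtime. The helper name `load_and_preview` is illustrative.

```python
def load_and_preview(filename, rows=5):
    """Read a local CSV into a DataFrame and print its first few rows."""
    # Imported here so the helper is self-contained.
    import pandas as pd

    df = pd.read_csv(filename)
    print(df.head(rows))
    return df
```

For example, `train = load_and_preview('train.csv')` reads the training file that was saved locally.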


Repeat as needed.